ARTIFICIAL INTELLIGENCE

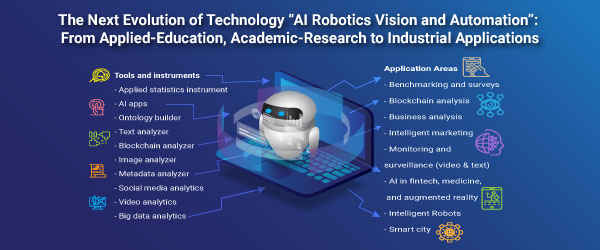

We simulate human intelligence in machines and integrate artificial intelligence with statistical methods to develop new AI algorithms and models.

We implement these methods as AI tools for information clustering, classification, grouping, relating, reasoning and searching.

We provide consultancy using our AI tools to analyze a business's rules, processes and practices; to identify business risks and bottlenecks; to capture a business's tacit knowledge as explicit knowledge; to predict unforeseen problems; and to optimize decision-making, thereby enhancing the wisdom management of the business.

Recent Developments

"Using a Novel Logic Gate with EfficientNets to Solve Fine-Grained Problem of Object Identification and Classification" with Marvel Digital AI Ltd

"Using Three-Layer Detector for Seal Recognition and Verification" with PwC Mainland China and Hong Kong

ZHAO, J.Y., FANG, Y.W. and LI, G.D. (2021). Recurrence along Depth: Deep Convolutional Neural Networks with Recurrent Layer Aggregation. 35th Conference on Neural Information Processing Systems (NeurIPS 2021).

GU, J.Q. and YIN, G. (2021). Crystallization Learning with the Delaunay Triangulation. 38th International Conference on Machine Learning (ICML 2021).

WANG, D., HUANG, F.Q., ZHAO, J.Y., LI, G.D. and TIAN, G.J. (2020). Compact Autoregressive Network. Proceedings of the AAAI Conference on Artificial Intelligence, 34, 6145-6152. doi:10.1609/aaai.v34i04.6079

ZHAO, J.Y., HUANG, F.Q., LV, J., DUAN, Y.J., QIN, Z., LI, G.D. and TIAN, G.J. (2020). Do RNN and LSTM have Long Memory? Proceedings of the 37th International Conference on Machine Learning, PMLR 119, 11365-11375.

TU, W., LIU, P., ZHAO, J.Y., LIU, Y., KONG, L.L., LI, G.D., JIANG, B., TIAN, G.J. and YAO, H.S. (2019). M-estimation in Low-Rank Matrix Factorization: A General Framework. 2019 IEEE International Conference on Data Mining (ICDM), 568-577. doi:10.1109/ICDM.2019.00067

CHEN, J., YANG, H. and YAO, J.F. (2018). A new multivariate CUSUM chart using principal components with a revision of Crosier's chart. Communications in Statistics: Simulation and Computation, 47(2), 464-476.

FANG, Y., XU, J. and YANG, L. (2018). Online Bootstrap Confidence Intervals for the Stochastic Gradient Descent Estimator. Journal of Machine Learning Research, 19, 1-21.

LEE, M.S. and WU, Y. (2018). A bootstrap recipe for post-model-selection inference under linear regression models. Biometrika, 105(4), 873-890.

LI, W.M. and YAO, J.F. (2018). On structure testing for component covariance matrices of a high dimensional mixture. Journal of the Royal Statistical Society. Series B: Statistical Methodology, 80(2), 293-318.

SHEN, K., YAO, J.F. and LI, W.K. (2018). On a spiked model for large volatility matrix estimation from noisy high-frequency data. Computational Statistics & Data Analysis, 131, 207-221.

WEI, B., LEE, M.S. and WU, X. (2016). Stochastically optimal bootstrap sample size for shrinkage-type statistics. Statistics and Computing, 26, 249-262.

SOLEYMANI, M. and LEE, M.S. (2014). Sequential combination of weighted and nonparametric bagging for classification. Biometrika, 101(2), 491-498.

XU, J. and YING, Z.L. (2010). Simultaneous estimation and variable selection in median regression using Lasso-type penalty. Annals of the Institute of Statistical Mathematics, 62(3), 487-514.

WANG, H.S., LI, G.D. and JIANG, G.H. (2007). Robust Regression Shrinkage and Consistent Variable Selection through the LAD-Lasso. Journal of Business and Economic Statistics, 25(3), 347-355.

WANG, H.S., LI, G.D. and TSAI, C.L. (2007). Regression coefficient and autoregressive order shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B: Statistical Methodology, 69(1), 67-78.